Image by: Charles Taylor, ©2019 Getty Images

Transparency is an important topic for any company looking to implement or use artificial intelligence (AI) systems. This is because some AI systems are essentially black boxes—you have no idea how they came to a decision—while others are more transparent and explainable. Many enterprise buyers want a reasonable level of transparency in their AI systems, but most AI developers only pay lip service to this requirement.

Today, organizations can use AI systems to improve their business operations, such as automating manual processes or creating ever-more personalized marketing campaigns. There are situations where it's not that important to know how a decision was made (such as reading/classifying documents or deploying automatic advertisements) as long as the system is working properly. We trust AI to do a good job, and with a little help from humans, it often exceeds our expectations.

However, there are times when the decisions of an AI system can have a major impact on an individual or a business. For example, an AI system might decide whether we get a home loan or whether a prisoner is paroled. In both cases, one would assume that if we disagree with the decision, we should have the right and the ability to understand exactly why it was made.

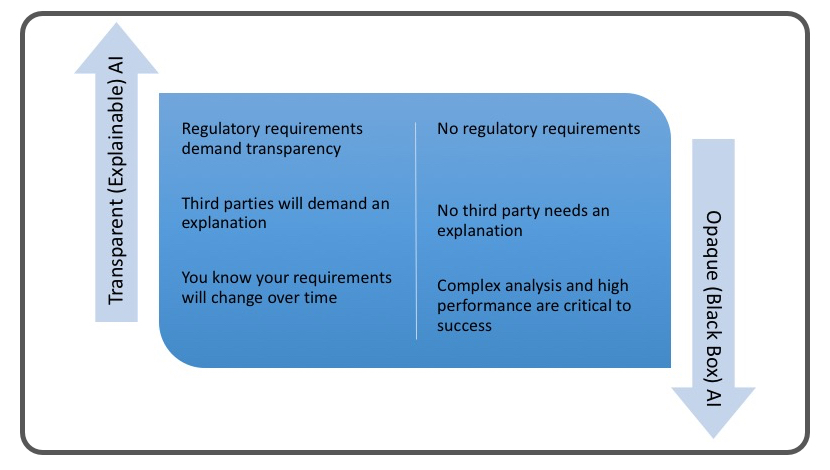

In an ideal world, AI should be transparent rather than opaque (i.e., a black box). Everything an AI system does should be explainable, but it's not as simple as that; there are trade-offs to be made. For example, deep learning and neural network AI systems can undertake the most complex tasks and analyses and still deliver high performance. However, they are also the most difficult (some might say impossible) to unravel, which makes it hard to explain how they reached their decisions. In contrast, more explainable and transparent AI models tend to be simpler systems that typically do not perform as impressively.
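To make the "transparent" end of this trade-off concrete, here is a minimal sketch of an explainable decision model: a simple weighted scorecard for the home loan example. The feature names, weights, and threshold are hypothetical values invented for illustration, not taken from any real lender or product. The point is only that every decision can be broken down into per-feature contributions that a person can read.

```python
# Hypothetical weights and threshold, for illustration only.
WEIGHTS = {
    "income_to_debt_ratio": 2.0,
    "years_employed": 0.5,
    "missed_payments": -1.5,
}
THRESHOLD = 4.0


def score_applicant(applicant):
    """Return (approved, total score, per-feature contributions)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    total = sum(contributions.values())
    return total >= THRESHOLD, total, contributions


def explain(contributions):
    """List features from most harmful to most helpful for the applicant."""
    return sorted(contributions.items(), key=lambda item: item[1])


applicant = {"income_to_debt_ratio": 1.5, "years_employed": 4, "missed_payments": 2}
approved, total, contributions = score_applicant(applicant)

print("approved:", approved, "score:", total)
for feature, value in explain(contributions):
    print(f"  {feature}: {value:+.1f}")
```

A deep neural network may well predict better than a scorecard like this, but it offers no comparably direct breakdown of why a given applicant was declined.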

Explainable AI vs Black Box of AI

©2019 Deep Analysis

Furthermore, if you have open and explainable AI systems, you are also potentially giving bad actors an opportunity to reverse engineer and more readily hack into your AI models. In fact, some experts go further and suggest that explainable AI may actually make our systems weaker. Maybe that's true; maybe it’s not. As with so many of these cases, it all depends on what you do with your AI systems and how you manage them over time.

Another key reason why organizations might need explainable AI systems is that current (and, most certainly, future) regulatory requirements demand it. Regulations like the Equal Credit Opportunity Act in the US or the European Union's "right to explanation" protect against discrimination and require that organizations be able to explain why critical decisions were made. They also ensure the right to redress any bad decisions.

To put it another way, if you or your organization were accused of violating such a regulation, you would need to prove that you were not, in fact, violating it. This is something to consider carefully when choosing your AI system design.

Another reason for explainability might simply be to gain executive sponsorship and support for your AI work. Quite understandably, executives are always going to be more likely to agree to the recommendations of AI systems if they can be explained in business terms that they understand.

Finally, you may need to consider the fact that any AI system will change and adapt over time. Naturally, you will want to improve your models and address or avoid problems, like spurious correlations (i.e., imagining relationships and patterns where none exist), that may arise. To introduce new domain knowledge for enhancing your models, you will need to understand how the model is constructed and operates currently.
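As a rough illustration of how spurious correlations arise, the sketch below generates a target and a few hundred candidate features that are all pure random noise, then reports the strongest correlation found. The sample size, feature count, and seed are arbitrary assumptions chosen for the demonstration. With few samples and many features, some feature will usually appear meaningfully related to the target by chance alone.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_chance_correlation(n_samples=20, n_features=200, seed=0):
    """Largest |correlation| between a random target and random features."""
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_samples)]
    best = 0.0
    for _ in range(n_features):
        feature = [rng.gauss(0, 1) for _ in range(n_samples)]
        best = max(best, abs(pearson(feature, target)))
    return best


best = strongest_chance_correlation()
print(f"strongest correlation among unrelated features: {best:.2f}")
```

A model that latches onto such a feature may look accurate on the data it was trained on and then fail later, which is one reason it helps to be able to see what a model is actually relying on.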

We have talked about the series of trade-offs that you will find in your AI work, but there is another tug of war you will almost certainly encounter. Have you guessed yet? Of course, it’s the seemingly eternal battle between information technology (IT) and the business. When it comes to whether AI should be explainable or not, these two groups have divergent views.

To be honest, AI engineers today may not be putting much effort into making these systems explainable. Often, they mistakenly believe that explainability doesn’t help them and that they may have to give up performance gains. It also requires them to document their work more accurately, which is not something they are really known to be keen on.

Certainly, explainable AI helps users and managers of these systems: it shows them how to explain the model to third parties, identify blind spots, and generally ensure everything is working properly. Explainable AI also serves the interests of engineers, as it helps them better understand the underlying dynamics of the systems they’re building and ultimately drives better adoption.

Think about how this may play out in your own AI project. How will you implement AI? What tools and approaches will you use? How will you balance the valid, yet dueling, positions of AI developers and users?

It’s something that needs to be considered very carefully at the start of your AI project, not when it's too late. If you make the wrong decision, it may prove costly.

Alan Pelz-Sharpe is the Founder and Principal Analyst of Deep Analysis, an independent technology research firm focused on next-generation information management. He has over 25 years of experience in the information technology (IT) industry working with a wide variety of end user organizations and vendors. Follow him on Twitter @alan_pelzsharpe.

Kashyap Kompella is the Founder and CEO of RPA2AI, a global industry analyst firm focusing on automation and artificial intelligence. Kashyap has 20 years of experience as a hands-on technologist, industry analyst, and management consultant. Follow him on Twitter @kashyapkompella.

![GettyImages-1211616422-[Converted]](https://cms-static.wehaacdn.com/documentmedia-com/images/GettyImages-1211616422--Converted-.2413.widea.0.jpg)